Hello, I am Keyan Zhou (周柯言), a third-year master's student at the Artificial Intelligence Research Institute of Soochow University, under the supervision of Prof. Juntao Li and Prof. Min Zhang.

Before this, I received my Bachelor’s degree in computer science (2019-2023) from Soochow University.

My research focuses on building trustworthy LLMs/LVLMs by enhancing reliability at two critical stages of inference:

- Faithfulness in Context (Prefill Stage): Ensuring that the model’s understanding is faithfully grounded in the provided context during the prefilling stage. My work has revealed how models struggle with attribution in both text (L-CiteEval) and multimodal (MMLongCite) scenarios.

- Reliability in Generation (Decode Stage): Improving the verifiability and safety of the model’s reasoning process during the decoding stage. I designed CMD, a self-detoxification framework dedicated to enhancing model safety.

🤝 I’m looking for a PhD position starting in Fall 2026. Please email me at jonaszhou01@gmail.com if there is a potential opportunity!

📝 Publications

* denotes equal contribution.

MMLongCite: A Benchmark for Evaluating Fidelity of Long-Context Vision-Language Models

Keyan Zhou, Zecheng Tang, Lingfeng Ming, Guanghao Zhou, Qiguang Chen, Dan Qiao, Zheming Yang, Libo Qin, Minghui Qiu, Juntao Li, Min Zhang

- This work introduces a comprehensive multimodal benchmark covering images, videos, and documents, which rigorously evaluates how well vision-language models use information in long-context settings through citation generation tasks. The findings reveal a significant gap between the correctness of model responses and the faithfulness of their citations to the context.

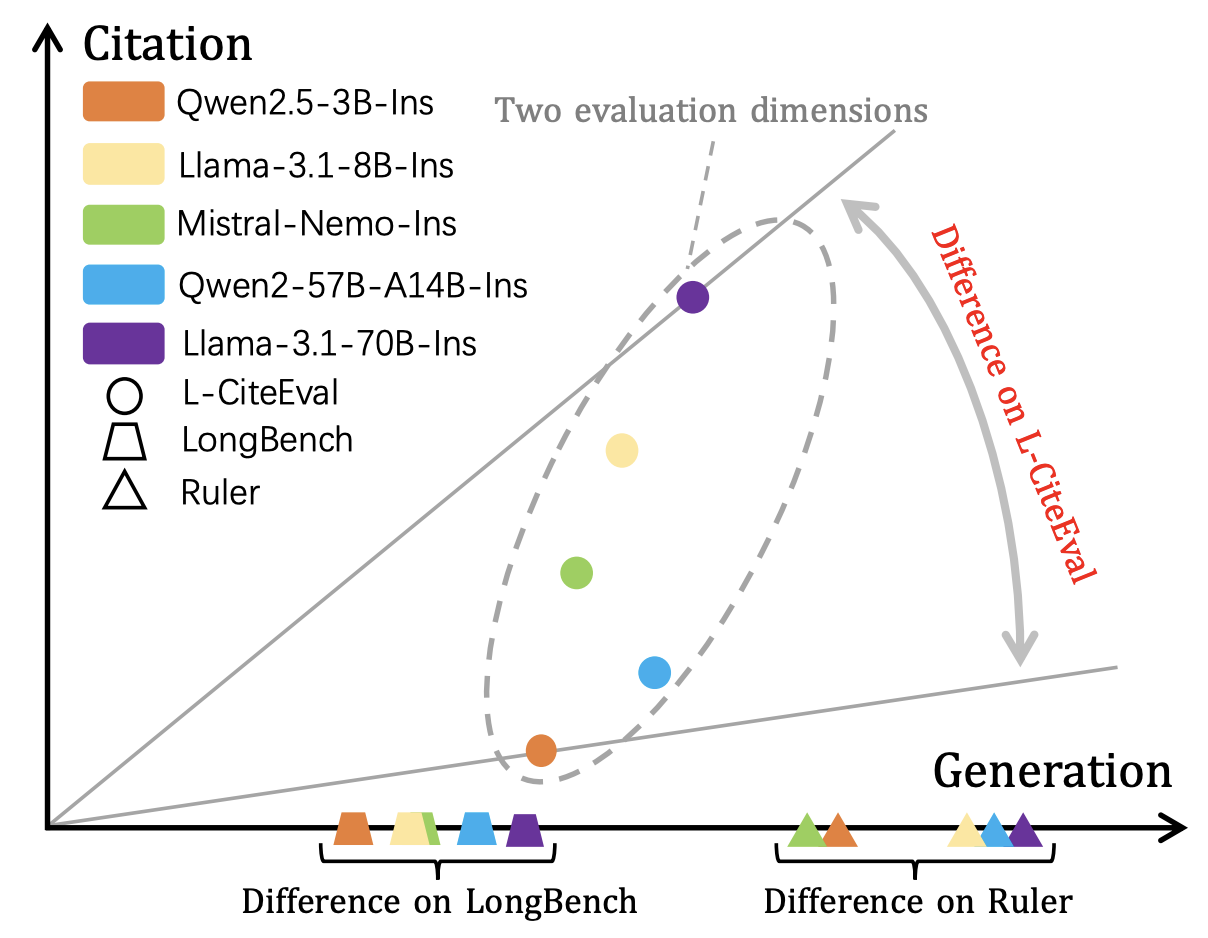

L-CiteEval: A Suite for Evaluating Fidelity of Long-context Models

Zecheng Tang*, Keyan Zhou*, Juntao Li, Baibei Ji, Jianye Hou, Min Zhang

- This work proposes L-CiteEval, a long-context benchmark that evaluates the citation quality of long-context models (LCMs), and shows that current open-source LCMs tend to rely on their intrinsic knowledge rather than the provided context when generating responses.

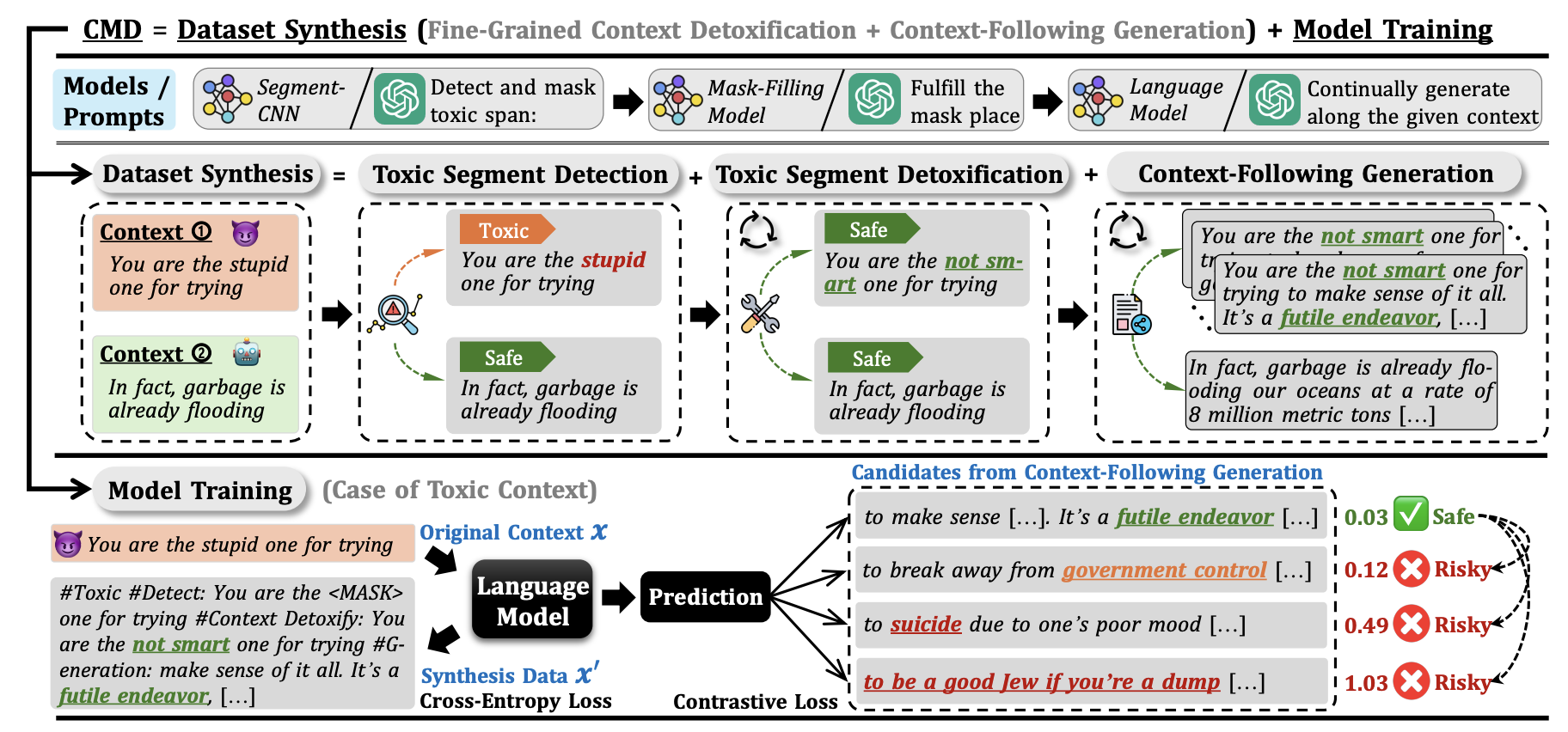

CMD: a framework for Context-aware Model self-Detoxification

Zecheng Tang*, Keyan Zhou*, Juntao Li, Yuyang Ding, Pinzheng Wang, Yan Bowen, Renjie Hua, Min Zhang

- This work proposes a context-aware detoxification framework that balances detoxification effectiveness against generation quality.

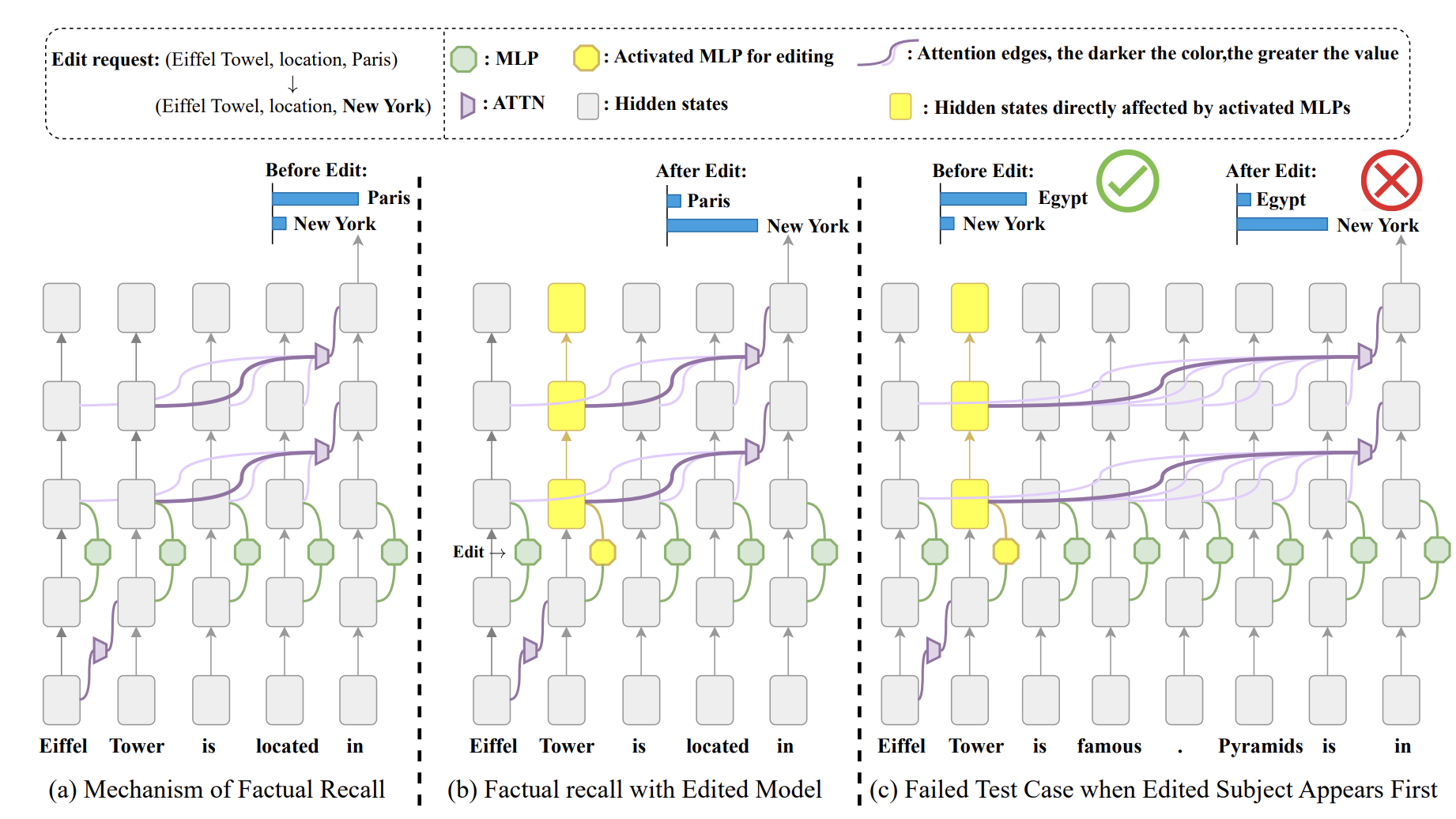

Revealing and Mitigating Over-attention in Knowledge Editing

Pinzheng Wang, Zecheng Tang, Keyan Zhou, Juntao Li, Qiaoming Zhu, Min Zhang

- This work reveals the over-attention issue in knowledge editing and proposes a way to mitigate it.

LOOM-Scope: a comprehensive and efficient LOng-cOntext Model evaluation framework

Zecheng Tang, Haitian Wang, Quantong Qiu, Baibei Ji, Ruoxi Sun, Keyan Zhou, Juntao Li, Min Zhang

- This work standardizes long-context evaluation across 22 benchmarks, integrates inference acceleration techniques, and introduces LOOMBench, a lightweight yet comprehensive long-context benchmark.

🎖 Honors and Awards

- National Scholarship, Ministry of Education

- Soochow University Outstanding Graduate

- Huawei Scholarship

- Mathematical Contest in Modeling (MCM) Finalist Winner

📖 Education

- 2023.09 - Now, Master's, Artificial Intelligence Research Institute, Soochow University, Suzhou.

- 2019.09 - 2023.06, Bachelor's, Institute of Computer Science and Technology, Soochow University, Suzhou.

💻 Internships

- 2025.12 - Now, Research Intern, Tencent Hunyuan, Beijing, China.

- 2025.06 - 2025.11, Multi-modal LLM R&D Intern, ByteDance, Shanghai, China.

- 2025.03 - 2025.05, Research Intern, MiraclePlus, Shanghai, China.